Recently I've been trying to give more journalism lectures and do less actual journalism, for two reasons: 1. investigative journalism turns out to be quite dangerous, and 2. I'm sick of pitching editors stories about things that I (and millions of others) think are important, but they do not.

My main merit is experience, going back to my brief time as a foreign correspondent and breaking news reporter 15 years ago. However, my lack of academic qualifications can be a hurdle. Thankfully, I have a couple of regular clients at London universities and secondary schools.

In pursuit of more engagements, I began to mention in my pitch that I can teach about my one specific use of Artificial Intelligence in investigative journalism.

I got a response from a university that, having read my email, latched on to my mention of AI. They asked if I could also talk about the ethical principles of AI, and about fact-checking with LLMs.

The problem is, I don't think AI has any ethical uses, even in investigative journalism, and it's even worse at fact-checking. So, though I needed the fee, I declined.

On Thursday 3rd April, the Society of Authors held a protest outside Meta's London office in King's Cross over the company's use of AI.

Their call to action read: “Stand with us this Thursday. Millions of books have allegedly been stolen by the #MetaBookThieves and used without permission or compensation to create a digital monster that threatens the future of every writer in the UK. This is a major attack by Meta on the livelihoods of creators. Help make us heard.”

A noble cause. Who could disagree with their aim? Not me.

The call to action seemed to have been prompted by the publication of a searchable database where authors can check whether their work has been sucked up into the AI-powered ‘digital monster’. The database in question is Library Genesis, or LibGen, which includes over 7.5 million books and 81 million research papers.

In its AI series, The Atlantic reported that court documents showed Meta staff had discussed licensing books and research papers lawfully, but instead chose to use stolen work because it was faster and cheaper.

Authors I knew, and some I didn't, posted screenshots of their results, accompanied by words of anger, disgust and dismay, on social media platforms, including Facebook. Ironic.

I myself even posted this:

I wondered, however, why I had rarely if ever seen any of these authors post about other social ills, or indeed about the ongoing genocides in Gaza and Sudan.

I wondered whether they were aware of the phrase “Progressive Except Palestine”. How could they not be?

It's ‘a phrase that refers to organisations or individuals who describe themselves politically as progressive, liberal, or left-wing but who do not express pro-Palestinian sentiment or do not comment on’ Israeli apartheid and, now, genocide.

Were the authors afraid to sully their brands or negatively impact their sales?

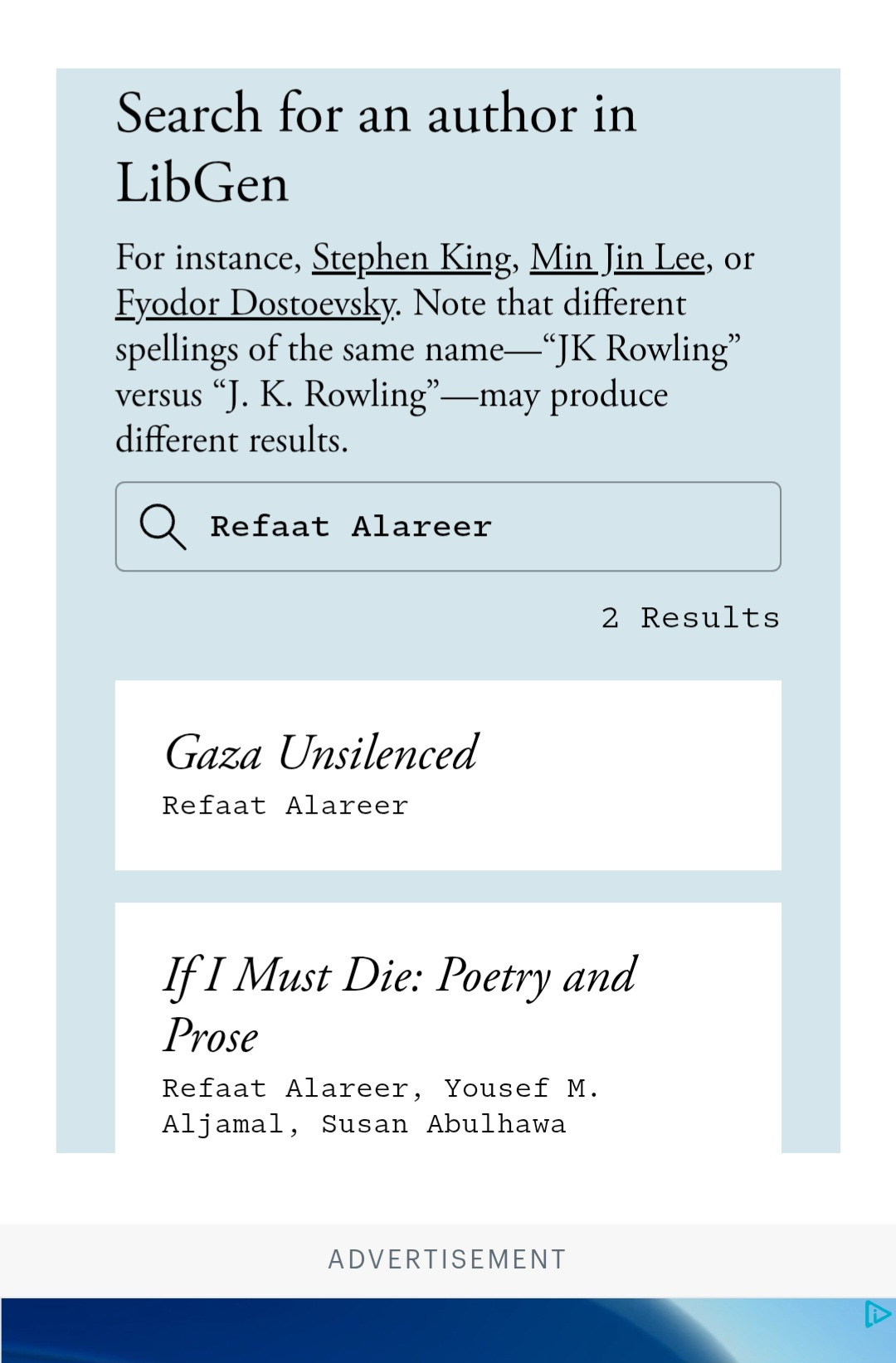

I went on to The Atlantic website and used my one free article to check the name of the revered, and now dead, Palestinian poet and academic Refaat Alareer. He and some members of his family were targeted by an Israeli missile on 6th December 2023. Here are his results:

I was not surprised to find that his last published words had also been sucked up by the conglomerate. After all, what do AI companies care about the genocide that is dominating social media feeds? It's just more grist for the AI content mill.

Last year an investigation published in +972 Magazine revealed that Israel was using AI targeting systems to identify tens of thousands of people in Gaza as targets.

“Lavender” is an AI recommendation system that uses algorithms to identify enemies as targets. “Where’s Daddy?” is ‘a system which tracks targets geographically so that they can be followed into their family residences before being attacked. Together, these two systems constitute an automation of the find-fix-track-target components of what is known by the modern military as the “kill chain”.’

With that in mind, the writer Linda Mamoun wrote the following, in what looked to me like a plea to the people who have yet to comment on or protest against Israel’s genocide, but who have taken to the barricades in their own self-interest:

“You might not care that Israel is committing genocide in Gaza. But here’s something you should know: Israel is using AI to target millions of civilians without any human oversight.

This is not science fiction. Genocidal Israelis programmed this AI to fulfill their genocidal intent—and they’ve paved the way for every country to use AI for killing. The genocide Israel is committing in Gaza will be replicated in other places. You might not care until it’s used on you.”

On Friday, Ibtihal Aboussad, a software engineer on Microsoft’s AI Platform team, confronted Mustafa Suleyman, the CEO of Microsoft AI, during a speech. “Shame on you,” she shouted. “You are a war profiteer. Stop using AI for genocide.” She was then escorted out.

Shortly afterwards she sent a company-wide email in which she said:

For the past 1.5 years, I’ve witnessed the ongoing genocide of the Palestinian people by Israel. I’ve seen unspeakable suffering amidst Israel’s mass human rights violations—indiscriminate carpet bombings, the targeting of hospitals and schools, and the continuation of an apartheid state—all of which have been condemned globally by the UN, ICC, and ICJ, and numerous human rights organizations.

All the while, our “responsible” AI work powers this surveillance and murder.

We are complicit

When I moved to AI Platform, I was excited to contribute to cutting-edge AI technology and its applications for the good of humanity: accessibility products, translation services, and tools to “empower every human and organization to achieve more.”

I was not informed that Microsoft would sell my work to the Israeli military and government, with the purpose of spying on and murdering journalists, doctors, aid workers, and entire civilian families.

Edited for brevity. Full email here.

I did not sign up to write code that violates human rights.

- Ibtihal Aboussad

Israel's use of Artificial Intelligence for grotesque and genocidal aims is not the only use of AI that targets oppressed and marginalised people.

Only this week, The Voice newspaper revealed that the Met Police had used AI facial recognition in Croydon, an area of London with one of the highest proportions of Black residents, to scan 128,518 faces for just 133 arrests.

‘This means that over 120,000 people in Croydon were tracked by the police for no reason at all.’

The problem here is that police facial recognition technology can’t reliably tell Black people apart, and AI-powered facial recognition leads to increased racial profiling.

I sent The Voice newspaper article to a friend who works in a school in Croydon. He said, 'You'd think they'd have learnt from the hand dryers.'

I replied, 'Those things still fuck me over, that and automatic taps!' For those who are unaware, sensor technology, like AI, has a problem with black skin in general. So I, and millions of other black people, have to wave our hands about for a stupid amount of time to get water out of taps and to get hand dryers to work.

While high-profile uses of AI, such as the one behind the #MetaBookThieves campaign, have received widespread media coverage, a report by Techtonic Justice highlights many other, lesser-known uses.

It found that ‘the use of artificial intelligence, or AI, by governments, landlords, employers, and other powerful private interests restricts the opportunities of low-income people in every basic aspect of life’ in the U.S.

With Keir Starmer's January 2025 proclamation that Labour plans to “turbocharge” the use of artificial intelligence, it looks like the UK will be going the way of the U.S.

Techtonic Justice, which was founded by the lawyer Kevin de Liban, reported that in housing, 39.8 million low-income people are exposed to AI-related decision-making through landlords’ use of background screening, and more are exposed through the use of rent-setting algorithms and ubiquitous surveillance technologies.

It also found that 2 million low-income domestic violence survivors are exposed to AI-related decision-making through police departments’ use of AI to assess their risk of further violence.

In employment, 32.4 million low-wage workers are exposed to AI in the context of work, such as AI-related decision-making through employers’ use of AI to determine who gets hired, to set workers’ pay, and to surveil and manage them.

There's much, much more in their report.

In February the Department for Work and Pensions (DWP) announced that it had been using AI since July 2020 to inform decisions on whether to approve or deny some benefit applications, and had allocated a further £10 million to explore and implement AI solutions.

It claimed an 87% correct prediction rate. It also admitted to some teething problems: the system’s developers confessed that the first version of the tool, used from 2020 to 2024, only achieved a 35% correct match rate, leaving 65% of cases to be corrected by an agent.

It did not refer to The Guardian’s revelation in December 2024 that a machine-learning program used by the DWP to detect welfare fraud had shown bias according to factors such as age, disability and nationality. An internal assessment of the tool found a “statistically significant outcome disparity” in its results.

In February this year, Joshua Rozenberg, who publishes the daily A Lawyer Writes, reported that in a speech, the head of civil justice announced:

“Lawyers must embrace AI,” because ‘AI creates work for lawyers: one of the biggest fields of legal activity in years to come is likely to be claims for the negligent or inappropriate use or failure to use AI.’

Sir Geoffrey Vos also said, ‘Those we serve are using it: all other industrial, financial and consumer sectors will be using AI at every level’.

Last week the non-profit newsroom More Perfect Union reported that data centers powering AI like ChatGPT could triple their electricity use by 2028.

They went to rural Georgia to find out “the true cost of the AI revolution”, and reported that the Trump administration is investing over $1 trillion in new data centers over the next five years.

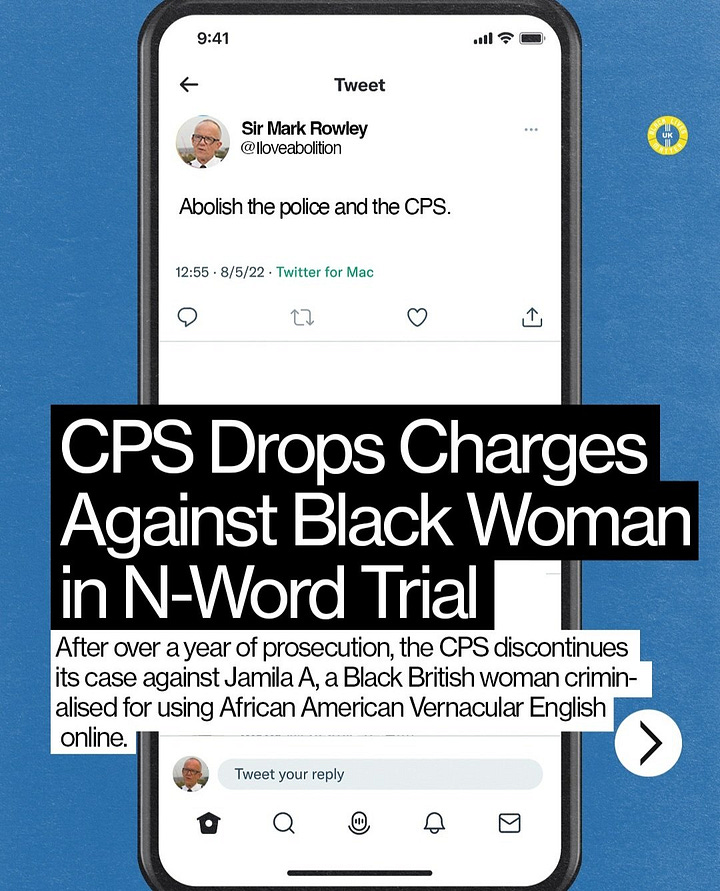

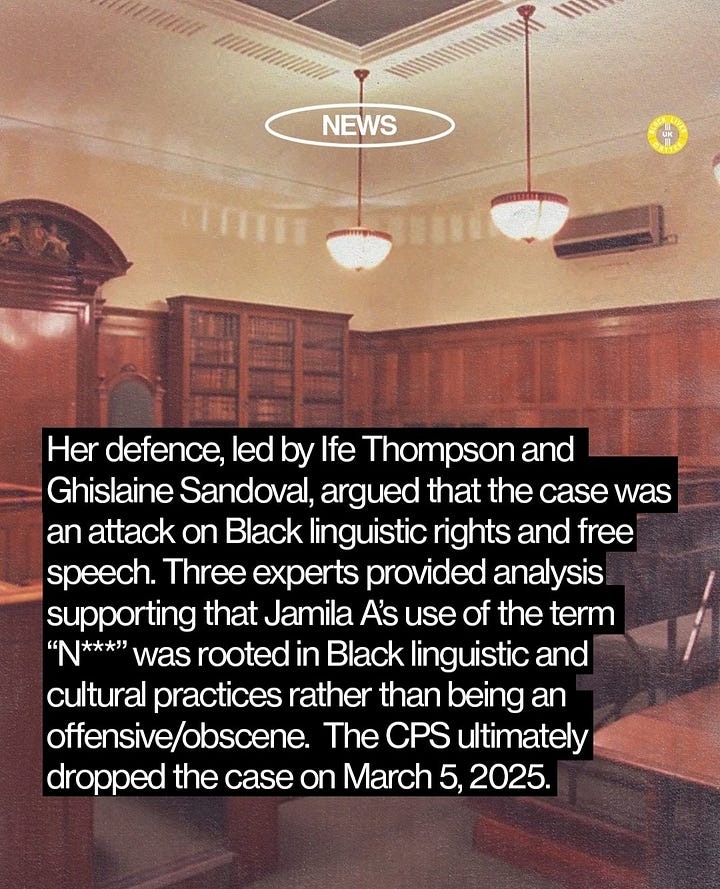

Last year a black woman was prosecuted by the CPS for using the N-word to another black woman, seemingly a friend, on X/Twitter. At the time I had no idea that this was all triggered by an AI moderation system. The charges were dropped last month.

Nonetheless, what a waste of time and money. And more importantly, what a way to cause unnecessary stress and fear for a young black woman.

While not exhaustive, the list above already begins to describe a world that has been, and is being, created by Artificial Intelligence and those who profit from it. OpenAI is projected to make $12.7 billion in revenue this year. Meta has a market cap of $1.51 trillion.

So, back to the authors and their #MetaBookThieves campaign. I wish them luck. Fighting for yourself and your own rights can be exhausting.

Imagine if authors, a privileged class (yes, you are), were also willing to fight for other marginalised people who have been targeted through the use of AI.

The Society of Authors’ call to action asked the public to ‘help make us heard’. Although its members voted in May against a motion demanding an official statement condemning Israel's military action in Gaza, they could still help make heard the voices of the authors, creators and artists whom Israel has killed, and try, even if ultimately in vain, to prevent the deaths of those it hasn’t.

Lebanese-American author and mathematical statistician Nassim Taleb wrote this on X/Twitter recently:

If an author or a public figure is too scared or too dependent to afford to speak out on Gaza & Palestine, their entire body of work is suspicious and, practically, useless. For they are most certainly subservient to dominant establishments in other things as well.

It is a powerful test because, owing to the imbalance of power, it is typically a "declaration against interest."

~ Nassim Taleb

Much to reflect on.

A list of some of my more popular newsletters published recently:

On Violence and The Wretched of the Earth

There Are No More Black Radicals

Exclusive: Do the Security Services have total impunity?

Political Correctness Gone MAAAADDDD!!

P.S. I know there are going to be some non-black and/or middle-class women who try to take my experiences and use them as their own. Trust me, I will hunt you down if you do, and you will catch these hands.